(Written by Human)

Over coffee, a friend tells you that the world is undergoing significant changes: AI is poised to revolutionize the way we work, build applications, and deliver product features. Shortly after, you get a call from someone researching ChatGPT. You turn on the news, and they are discussing ChatGPT too. At the office, you learn about numerous tech initiatives centered on AI. All these sources make it evident that ChatGPT and AI are becoming prominent forces in our lives. The evolution of AI is often likened to the transformation brought about by computers and programming: just as computers changed the world, AI is expected to reshape it too.

Research on AI has been going on for decades. However, real democratization and widespread awareness of AI surged with the arrival of ChatGPT. Generative AI, with ChatGPT as its best-known example, exhibits human-like capabilities such as answering questions, generating artwork, producing code, and much more. This remarkable potential raises a question for you as a technical person: can you build something similar for your own application? Intrigued by the buzz surrounding AI, you set out to explore the possibilities it holds.

If you’re interested in developing an application with AI, you have a few choices:

1. Develop your own model: This involves writing your own code and delving into the intricacies of Machine Learning. It’s akin to creating a program that can store, learn, and process human knowledge. Architectures such as RNNs and CNNs have been researched extensively, and the Transformer architecture has been groundbreaking in changing how models learn from language. However, building a Machine Learning model is a research-intensive task, especially if you aim to create something as complex as a Transformer-based model. If you’re primarily focused on application development, this option might not be the best fit.

2. Train an existing model: This option entails feeding knowledge to a pre-existing model, which then retains it for future use. You can ask questions based on the trained knowledge and check whether the model produces the expected results. It’s like having a new human brain that’s ready to learn anything. However, this task requires a solid understanding of Machine Learning, including model deployment, preparing and feeding training data, and monitoring responses. It builds on option #1 and can demand an immense amount of data and processing power. For instance, the GPT-3.5 model behind the free version of ChatGPT builds on GPT-3, whose training data included about 570GB of filtered web text and which was trained on large GPU clusters; its knowledge is frozen as of September 2021. So, if you opt for this path, be prepared for significant data, processing, and time requirements. This might also not be ideal if you’re primarily focused on application development.

3. Choose the RAG option: If you aim to achieve ChatGPT-like interactions that are contextually aware of your knowledge, application, and more, the Retrieval-Augmented Generation (RAG) approach might be the best fit. This approach combines the benefits of retrieval and generative models, allowing for more informed and contextually relevant responses.

Retrieval-Augmented Generation (RAG) is an architecture that lets you take the capabilities of modern machine learning models and tailor them to your application’s requirements and data, so you can deliver better solutions to customers. In the rapidly evolving AI landscape, RAG stands out as a powerful approach (though predicting even the next three months is challenging). Major tech companies such as Microsoft, AWS, and Google are investing heavily in RAG because of its potential. It offers an ideal blend of general AI capabilities and context-specific knowledge.

The overall approach is simple, requiring three key components:

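1. Vector Database: Regular RDBMS and NoSQL databases may not suffice for storing knowledge that machine learning models work with, because that knowledge is represented as embeddings (numeric vectors) that must be searched by similarity rather than by exact match. Hence, a specialized vector database is necessary (to be covered in the next blog).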

2. ChatGPT API Endpoint: This could be any model you want to interact with, such as models served through the OpenAI API or OpenAI models deployed on Azure. As long as your application can call the API, it will work (to be covered in an upcoming blog).

3. Application to Glue It All Together: You need an application, written in any programming language, that serves as the face of your solution. You are free to choose UI and backend technologies according to your preferences (to be covered in the last blog).

That’s all.
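To make the vector database’s role more concrete, here is a minimal in-memory stand-in, offered only as a sketch. It assumes a toy bag-of-words "embedding" in place of a real embedding model, and the names `embed`, `cosine`, and `InMemoryVectorStore` are hypothetical. A real vector database adds persistence and approximate nearest-neighbour indexes, but the core idea is the same: store a vector per piece of knowledge, then rank stored items by similarity to the query’s vector.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding' (assumption): a sparse bag-of-words vector of lowercase word counts.
    A real system would call an embedding model here instead."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(count * b[word] for word, count in a.items())  # missing words count as 0
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class InMemoryVectorStore:
    """Hypothetical stand-in for a vector database: keeps (text, vector) pairs in a list."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Store the original text alongside its vector so results can be returned as text.
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 3) -> list[tuple[str, float]]:
        """Return the k stored texts most similar to the query, best first."""
        query_vec = embed(query)
        scored = [(text, cosine(query_vec, vec)) for text, vec in self._items]
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:k]


if __name__ == "__main__":
    store = InMemoryVectorStore()
    store.add("Refunds are processed within 5 business days.")
    store.add("Our office is open Monday to Friday, 9am to 5pm.")
    store.add("Premium plans include priority email support.")
    for text, score in store.search("How long do refunds take?", k=1):
        print(f"{score:.2f}  {text}")
```

Because this toy `embed` only counts shared words, it finds the refunds sentence through the word "refunds"; replacing it with a real embedding model is what lets a vector database match questions to content that uses different wording.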

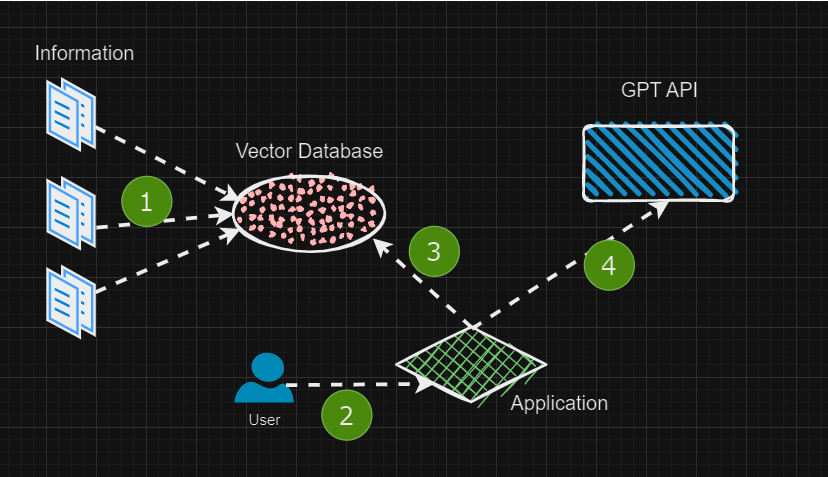

Below is the high-level architecture of this entire RAG solution.

Below is the flow of your application:

1. Gather all your information, including documents and knowledge bases, and store it in a Vector Database, typically by splitting it into chunks and storing an embedding for each chunk. This makes all of it available for similarity search.

2. When a user asks a question related to the stored information, your application needs to process the query.

3. The application first sends the user’s question to the Vector Database to retrieve the content most relevant to the query.

4. Using the content retrieved from the Vector Database, the application builds a context and sends it, together with the user’s question, to the GPT API to obtain a response. The response is then returned to the user in a ChatGPT-like interaction.
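Steps 2 to 4 can be sketched in Python using only the standard library. This is a hedged illustration, not the implementation this series will build: `retrieve` stands in for the vector database query and `complete` for the GPT API call, both callables you supply, and `build_messages`, `chat_completions_request`, `openai_complete`, and `answer` are hypothetical names. The request targets OpenAI’s Chat Completions endpoint with `gpt-3.5-turbo` as an assumed default model and reads the key from an assumed `OPENAI_API_KEY` environment variable; Azure-deployed models use a different URL and authentication header.

```python
import json
import os
import urllib.request
from typing import Callable


def build_messages(question: str, chunks: list[str]) -> list[dict]:
    """Step 4: turn the retrieved chunks into a context the model can use."""
    context = "\n\n".join(f"[{i + 1}] {chunk}" for i, chunk in enumerate(chunks))
    system = (
        "Answer the user's question using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}"
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


def chat_completions_request(messages: list[dict], model: str = "gpt-3.5-turbo") -> urllib.request.Request:
    """Build (but do not send) a POST to OpenAI's Chat Completions endpoint."""
    body = json.dumps({"model": model, "messages": messages}).encode("utf-8")
    return urllib.request.Request(
        "https://api.openai.com/v1/chat/completions",
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {os.environ.get('OPENAI_API_KEY', '')}",
        },
        method="POST",
    )


def openai_complete(messages: list[dict]) -> str:
    """Send the request and return the reply text (needs network access and an API key)."""
    with urllib.request.urlopen(chat_completions_request(messages)) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


def answer(
    question: str,
    retrieve: Callable[[str, int], list[str]],
    complete: Callable[[list[dict]], str] = openai_complete,
    k: int = 3,
) -> str:
    chunks = retrieve(question, k)               # Step 3: fetch the top-k chunks from the vector database
    messages = build_messages(question, chunks)  # Step 4: build the context
    return complete(messages)                    # Step 4: ask the model; the reply goes back to the user


if __name__ == "__main__":
    knowledge = ["Refunds are processed within 5 business days."]
    fake_retrieve = lambda question, k: knowledge[:k]      # stand-in for the vector database
    show_prompt = lambda messages: messages[0]["content"]  # stand-in for the API call (no network)
    print(answer("How long do refunds take?", fake_retrieve, show_prompt))
```

Passing `retrieve` and `complete` in as parameters is one possible design choice: it keeps the RAG flow independent of any particular vector database or model host, and it lets you exercise the flow without network access, as the demo at the bottom does.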

RAG is an incredibly powerful approach that lets you harness the potential of AI within the context of your application. Throughout this series, we will cover every part of this solution. Stay tuned for the next installment, and if you have any questions about it, feel free to reach out to me.

Happy AI! 😊

(Review Help From Machine)
