Welcome to the blog on the Bulkhead Design Pattern.

Introduction

Historically, IT was treated as a back-office function, and it was assumed that the business could run without it. Outside a few industries such as airlines and healthcare, it was rarely considered a mission-critical part of the business. During the recent COVID pandemic, when humankind was facing the challenge of survival, IT became mission-critical for many day-to-day operations, from grocery delivery to working remotely.

As IT systems became mission-critical, resiliency became one of the critical factors in their success. Resiliency refers to the ability of a system to function correctly in the face of unexpected events. These events should be planned for as part of the architecture, so that when they happen in production we already know how to handle them. Various resiliency patterns are available when building a robust application. In this blog, we will explore the Bulkhead Design Pattern.

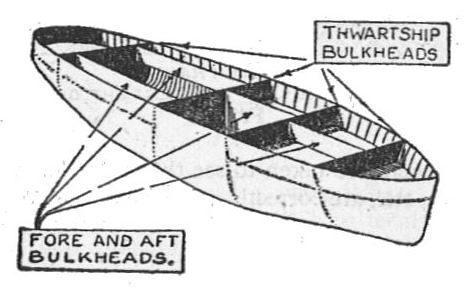

The bulkhead pattern comes from the shipbuilding industry. When a ship is built, partitions or walls are created in the hull. These partitions protect the ship by containing damage to a limited area. If one of the partitions floods because of a leak, the water stays within that partition and the ship does not sink.

The bulkhead resiliency pattern helps in a similar way to contain the impact of a faulty operation within a limited area. This helps other parts of the application to operate normally when one of the areas is impacted by faulty code or faulty dependencies.

Let’s understand the concept through an example. I have developed this example as an ASP.NET Core Web API, but the concept is not restricted to ASP.NET. You can implement the same idea on other technology stacks as well.

Code Base

The code base used in this blog is available on GitHub, and you can download it from there.

https://github.com/akumaramar/bulkhead

Tools Used

- Visual Studio Code – Code Editor

- K6 – Developer Performance Testing Tool

- Polly – Library for resiliency

Flow of the Application

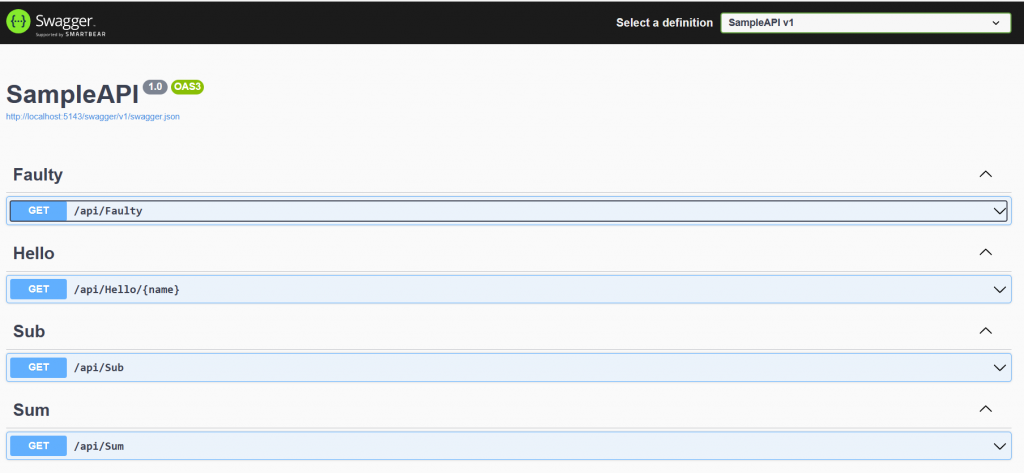

The SampleAPI application is composed of four controllers. These controllers have the below functionality.

- FaultyController – This controller runs computation-heavy code that keeps the CPU busy for a fixed period and causes it to spike.

- SumController – This controller returns the sum of two numbers.

- SubController – This controller returns the difference of two numbers.

- HelloController – This controller returns a hello message in response to the request.

As the first step, open the SampleAPI application in Visual Studio Code and start it with F5. Once the application is up and running, you can open the Swagger UI to try these APIs and understand how they behave.

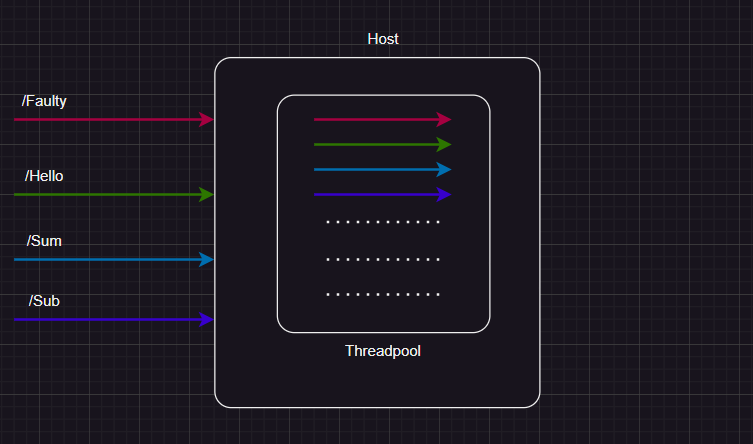

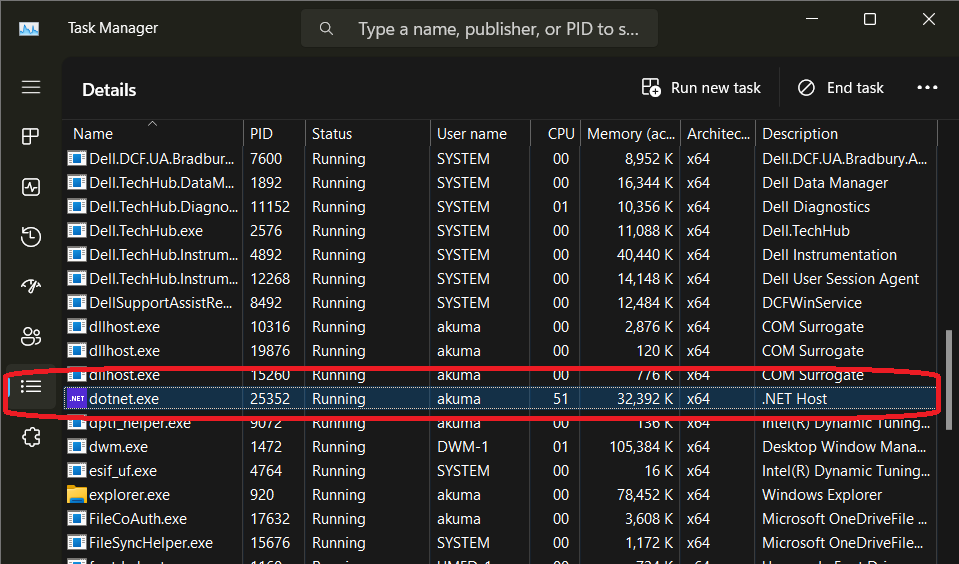

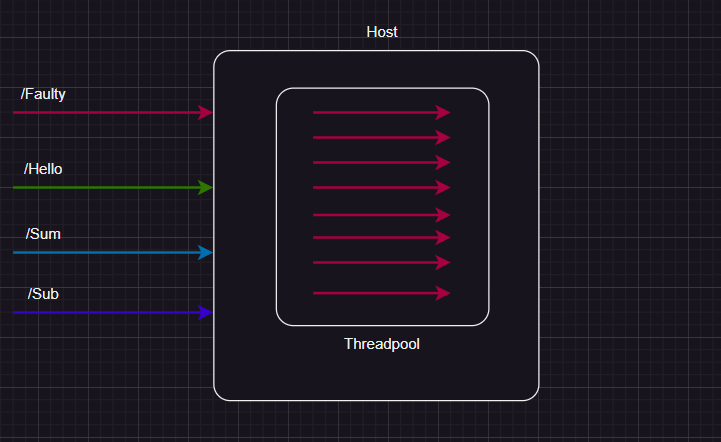

The diagram below shows the expected behavior and how the host machine and the ThreadPool are engaged in serving requests. In a healthy situation, incoming requests are spread across the available ThreadPool threads.

As more requests arrive at the application, they should be distributed in the same way and the application should keep working as expected. However, this doesn’t happen in the current situation because of one interesting piece of code in FaultyController. This code keeps the CPU busy by repeatedly summing the square roots of the numbers from 1 up to 100K. The operation runs for around 2 seconds, and during this period the CPU is fully engaged in the computation. Below is the code block; refer to the FaultyCode function.

namespace TodoApi.Controllers

{

[Route("api/[controller]")]

[ApiController]

public class FaultyController : ControllerBase

{

[HttpGet(Name = "GetFaulty")]

public String GetAllData(String name){

return FaultyCode();

}

// This code causes the CPU to spike a lot

private string FaultyCode(){

DateTime timeAfterFiveSec = DateTime.Now.AddSeconds(2);

// perform a computationally intensive task

while (DateTime.Now < timeAfterFiveSec)

{

double result = 0;

for (int i = 1; i < 100000; i++)

{

result += Math.Sqrt(i);

}

}

return "Time's up!";

}

}

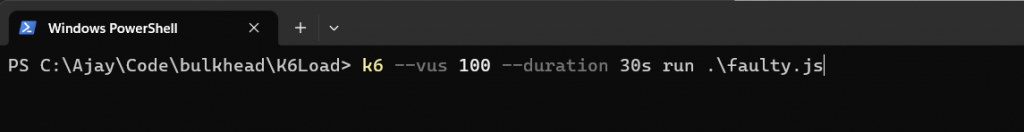

}Let us simulate the load on this entire application using the K6 load runner. This is a very nice tool that you can use in the development environment to simulate user load. We are going to load the FaultyController with 100 users for 30 seconds and check how our CPU is behaving. The sample code to generate the load is kept under the K6Load folder. It has a code base for loading all routes of the application. Below is the codebase for the load Faulty endpoint.

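```javascript
import http from "k6/http";
import { check } from "k6";

export default function () {
  // Call the CPU-heavy endpoint
  let res = http.get("http://localhost:5143/api/Faulty?name=Hello");

  // Verify that the request succeeded
  check(res, {
    "status is 200": (r) => r.status === 200
  });
}
```

You can see that we are making a request to `/api/Faulty` and checking whether the response status is 200. To run the load, pass the script to the `k6 run` command; the number of virtual users and the duration can be set with its `--vus` and `--duration` options (here, 100 users and 30 seconds).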

As soon as you start running the load against this endpoint, you can see the CPU usage of dotnet.exe spike. The spike continues for the full 30 seconds.

During this period, if you check the response time of the other endpoints (use the other files in the K6Load folder and run them from the command line), you will find a significant degradation in overall response time along with a higher failure rate. What is happening here is that the ThreadPool on the server is occupied by Faulty requests and there is no bandwidth left to handle general requests. This situation is really bad for a production environment, as it can bring down your entire application.

To build resiliency into the application, we need to restrict how many threads can be occupied by Faulty calls. The bulkhead design pattern comes to the rescue in this situation. Below is the syntax of Bulkhead in the Polly library.

```csharp
// Synchronous bulkhead
BulkheadPolicy bulkhead = Policy
    .Bulkhead(int maxParallelization
        [, int maxQueuingActions]
        [, Action<Context> onBulkheadRejected]);

// Asynchronous bulkhead
BulkheadPolicy bulkhead = Policy
    .BulkheadAsync(int maxParallelization
        [, int maxQueuingActions]
        [, Func<Context, Task> onBulkheadRejectedAsync]);
```

- maxParallelization – This parameter controls how many requests can be executed in parallel through this policy.

- maxQueuingActions – This parameter controls how many additional requests may wait in a queue once all parallel slots are occupied. Requests that arrive when the queue is also full are rejected.
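To make the interaction between the two parameters concrete, here is a minimal sketch of the idea using two semaphores from the .NET base class library. It is an illustration only and should not be read as Polly's actual implementation; the `SimpleBulkhead` and `BulkheadDemo` names are hypothetical and exist only for this example.

```csharp
using System;
using System.Threading;

// Hypothetical illustration of the bulkhead idea; not Polly's implementation.
public sealed class SimpleBulkhead
{
    private readonly SemaphoreSlim _executionSlots; // limits parallel executions
    private readonly SemaphoreSlim _admissionSlots; // limits executing + queued callers

    public SimpleBulkhead(int maxParallelization, int maxQueuingActions)
    {
        _executionSlots = new SemaphoreSlim(maxParallelization, maxParallelization);
        int capacity = maxParallelization + maxQueuingActions;
        _admissionSlots = new SemaphoreSlim(capacity, capacity);
    }

    // Returns false without running the action when every slot and the queue are full.
    public bool TryExecute(Action action)
    {
        if (!_admissionSlots.Wait(0)) return false; // no room left: reject immediately
        try
        {
            _executionSlots.Wait(); // wait in the queue until an execution slot is free
            try { action(); }
            finally { _executionSlots.Release(); }
            return true;
        }
        finally { _admissionSlots.Release(); }
    }
}

public static class BulkheadDemo
{
    // Sends 6 concurrent callers through a bulkhead with 2 slots and a queue of 2.
    public static (int accepted, int rejected) Run()
    {
        var bulkhead = new SimpleBulkhead(2, 2);
        using var gate = new ManualResetEventSlim(false);
        int accepted = 0, rejected = 0;
        var threads = new Thread[6];
        for (int i = 0; i < threads.Length; i++)
        {
            threads[i] = new Thread(() =>
            {
                // Admitted callers block on the gate, so they hold their slots.
                if (bulkhead.TryExecute(() => gate.Wait())) Interlocked.Increment(ref accepted);
                else Interlocked.Increment(ref rejected);
            });
            threads[i].Start();
        }
        // Wait until the two surplus callers have been turned away, then let the rest finish.
        SpinWait.SpinUntil(() => Volatile.Read(ref rejected) == 2);
        gate.Set();
        foreach (Thread t in threads) t.Join();
        return (accepted, rejected);
    }

    public static void Main()
    {
        var (accepted, rejected) = Run();
        Console.WriteLine($"accepted={accepted}, rejected={rejected}");
    }
}
```

With 2 execution slots and a queue of 2, four of the six callers are eventually served and two are rejected straight away. The important design point is that a rejected caller never waits: it fails fast instead of tying up a thread.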

We will use the above policy in our FaultyController to control how many requests it handles in parallel and how many it queues. Below is the updated code with Bulkhead resiliency.

```csharp
using Microsoft.AspNetCore.Mvc;
using Polly;
using Polly.Bulkhead;
using System.Diagnostics;

namespace TodoApi.Controllers
{
    [Route("api/[controller]")]
    [ApiController]
    public class FaultyController : ControllerBase
    {
        // Bulkhead policy: 2 parallel executions, 2 queued, the rest rejected
        private static readonly BulkheadPolicy bulkHeadPolicy = Policy
            .Bulkhead(2, 2, context => {
                Debug.WriteLine("More than 2 parallel calls are rejected");
            });

        [HttpGet(Name = "GetFaulty")]
        public string GetAllData(string name)
        {
            // Wrap the code under the policy.
            // Note: for a rejected call, Result is null because FaultyCode never ran.
            return bulkHeadPolicy.ExecuteAndCapture(() => FaultyCode()).Result;
        }

        // This code causes the CPU to spike a lot
        private string FaultyCode()
        {
            // Keep the CPU busy for about 2 seconds
            DateTime deadline = DateTime.Now.AddSeconds(2);

            // Perform a computationally intensive task
            while (DateTime.Now < deadline)
            {
                double result = 0;
                for (int i = 1; i < 100000; i++)
                {
                    result += Math.Sqrt(i);
                }
            }

            return "Time's up!";
        }
    }
}
```

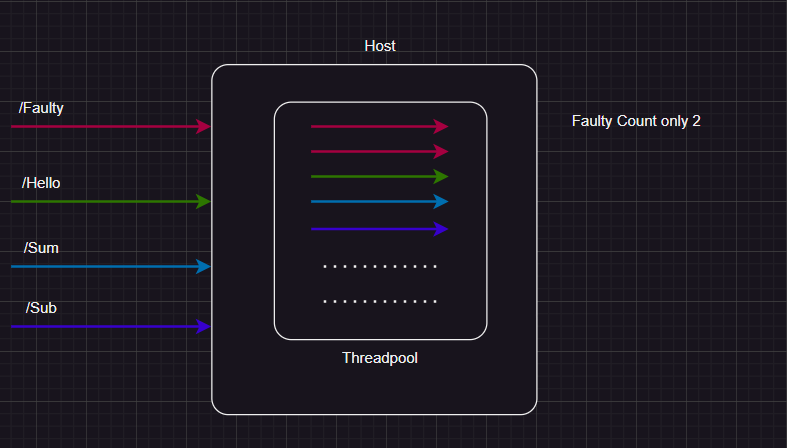

Look at the `bulkHeadPolicy` field, where we add a policy that executes only 2 requests in parallel and lets only 2 more wait in the queue. Any request that arrives beyond this limit is rejected, and the rejection callback writes “More than 2 parallel calls are rejected” to the debug output.
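One detail worth handling explicitly is what the caller receives when a request is rejected. With `ExecuteAndCapture`, a rejection is captured in the returned `PolicyResult` rather than thrown, so its `Result` is empty. The console sketch below, assuming the Polly v7 API, shows one possible way to tell the cases apart. The `RejectionDemo` class, the `ToStatusCode` helper, and the choice of status codes 429 and 500 are assumptions made for this illustration and are not part of the sample repository.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using Polly;
using Polly.Bulkhead;

public static class RejectionDemo
{
    // Hypothetical helper: maps a captured policy outcome to an HTTP-style status code.
    public static int ToStatusCode(PolicyResult<string> outcome)
    {
        if (outcome.Outcome == OutcomeType.Successful) return 200;
        // A full bulkhead is reported as a BulkheadRejectedException.
        return outcome.FinalException is BulkheadRejectedException ? 429 : 500;
    }

    public static int[] Run()
    {
        // One execution slot and no queue, so a second concurrent call is rejected.
        BulkheadPolicy bulkhead = Policy.Bulkhead(1, 0);
        using var started = new ManualResetEventSlim(false);
        using var release = new ManualResetEventSlim(false);

        // Occupy the only slot until 'release' is signalled.
        Task<PolicyResult<string>> first = Task.Run(() =>
            bulkhead.ExecuteAndCapture(() =>
            {
                started.Set();
                release.Wait();
                return "Time's up!";
            }));

        // Once the first call is running, the second one finds the bulkhead full.
        started.Wait();
        PolicyResult<string> second = bulkhead.ExecuteAndCapture(() => "never runs");

        release.Set();
        return new[] { ToStatusCode(first.Result), ToStatusCode(second) };
    }

    public static void Main()
    {
        int[] codes = Run();
        Console.WriteLine($"first={codes[0]}, second={codes[1]}");
    }
}
```

In a controller, the same check could be used to return an explicit "too many requests" response for rejected calls instead of an empty body, which makes the rejection visible to load-testing tools and clients.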

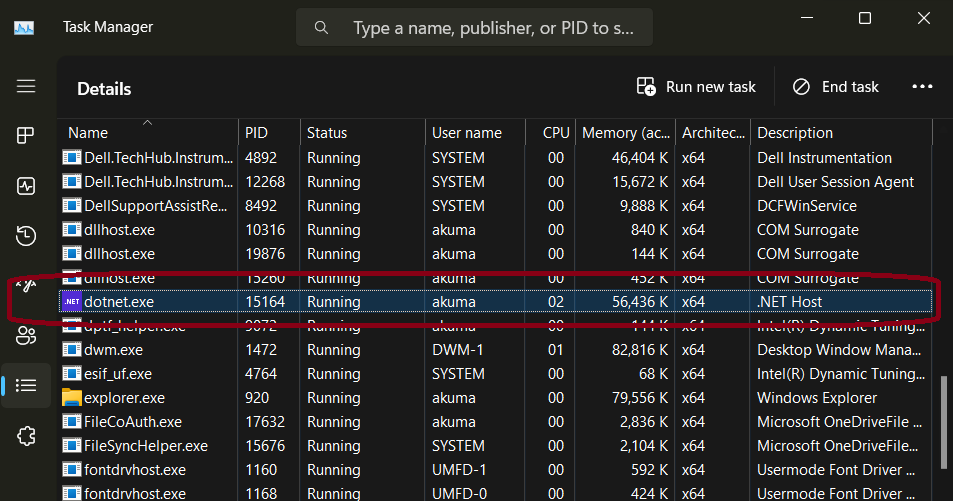

Let’s run this code and see how the CPU behaves. Use the same K6 command as mentioned above. You can see that the CPU no longer goes beyond a certain level and remains available to handle other requests.

If you now simulate load on the other endpoints, you will reach the situation below, where only 2 parallel requests are being served for Faulty and the other requests continue to be handled without any disturbance. The diagram below represents that situation.

Conclusion

Resiliency is an important aspect of design, and we need to consider it from day one of development. Bulkhead can help you compartmentalize an issue and prevent it from spreading across your entire deployment.

Happy Designing Resilient Systems. Feel free to reach out to me.