(Disclaimer: Written by Human with proof reading by AI. Code by Human as coding assistants are refering older API version when this blog was written 🙂 . Architectural digrams are also from Human.)

Everyone is talking about Model Context Protocol (MCP). It’s rocking the industry, and everyone wants to understand what the heck this MCP is. In the next 15 minutes, I will tell you why you should really care about MCP.

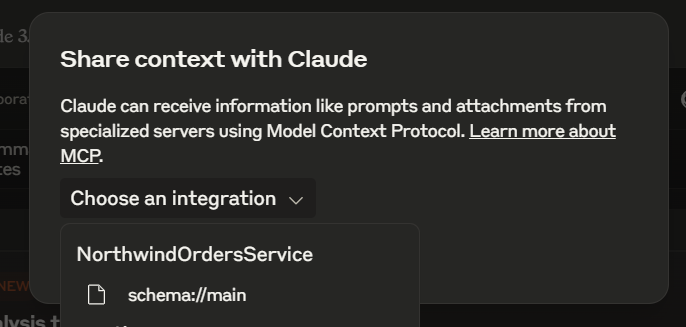

Should you put your energy into learning MCP in an ever-changing AI landscape where tools and technologies become outdated every 15 to 30 days? In this blog, I will cover why MCP is so important, along with an example scenario where we build an SQL query tool using the MCP Python SDK, Claude Desktop, PostgreSQL, and Northwind DB to query Customer Orders. But this is not limited to customer-related queries—you can ask any kind of query to this tool. It will run on your desktop and become a valuable assistant in your day-to-day job. Using the same concept, you can build other MCP servers that provide tools and resources to assist with daily tasks. You can also leverage this for customer solutions to increase the value of AI-powered applications.

Let’s get started.

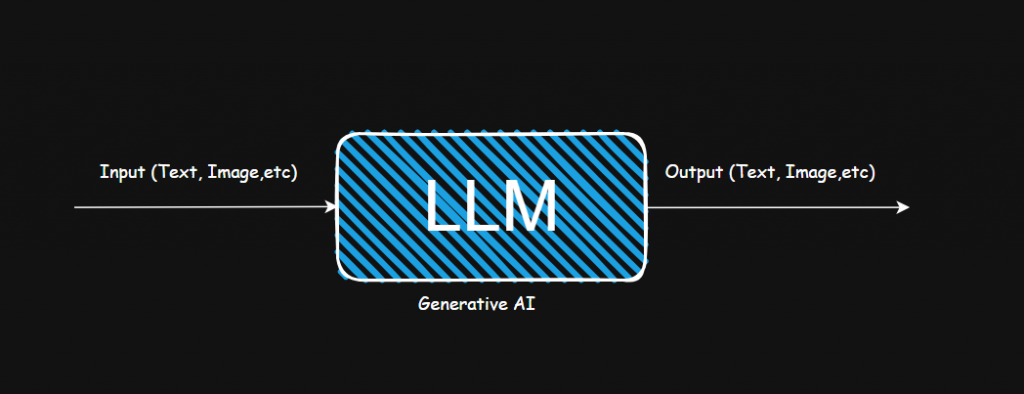

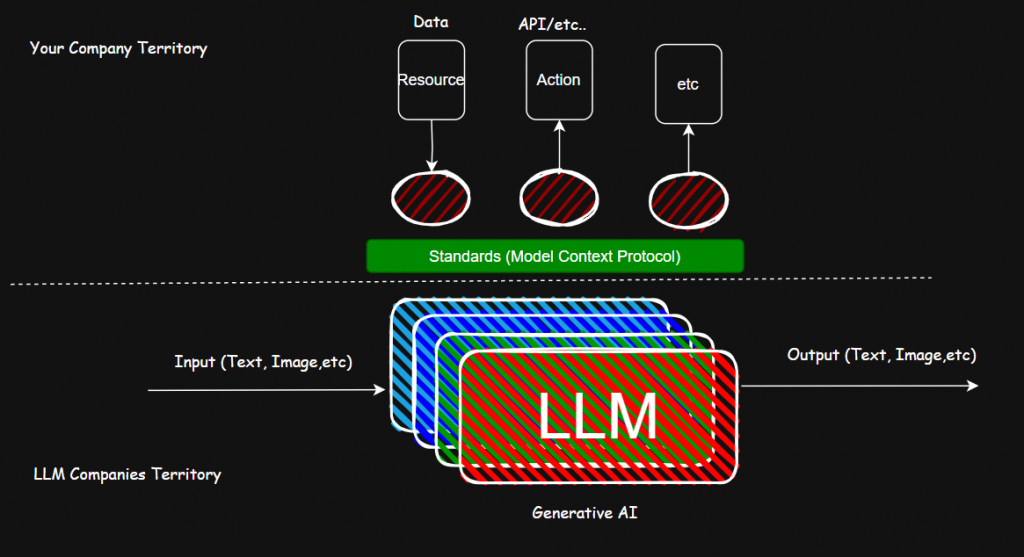

The story begins with LLMs, which promise to change the world and impact every job on the planet. However, if you look closely, these LLMs are Generative AIs. Their job is to predict the most probable token (word, phrase, etc.) based on the input provided. They excel at this because they have been trained on vast amounts of world knowledge. Given an input, they generate the most probable output. They are fantastic at what they do.

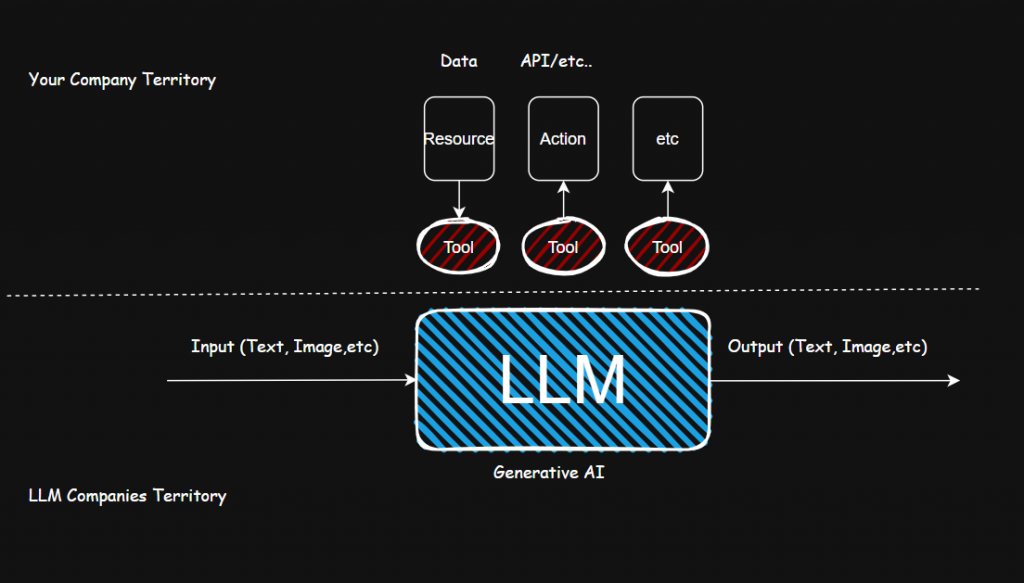

However, to solve real-world problems, LLMs need two additional capabilities:

- It’s great to have world knowledge, but can you work on my proprietary knowledge?

- Can you take some action based on the output?

These two capabilities make LLMs truly useful in real-world scenarios—exactly what our software does every day. These features have been added through two key techniques:

- Retrieval-Augmented Generation (RAG): Provides the necessary context.

- Action Hooks: Enables execution of specific actions.

With RAG, you can provide problem-specific context, and with action hooks, you can execute functions like API calls, database operations, writing to files, and more. These two features make LLM-based solutions extremely powerful. The context could be anything—text files, images, databases, etc. The tools could be API calls, DB insert execution, file writing, and more.

We started integrating these enhancements, and our LLMs became very powerful.

The Twist

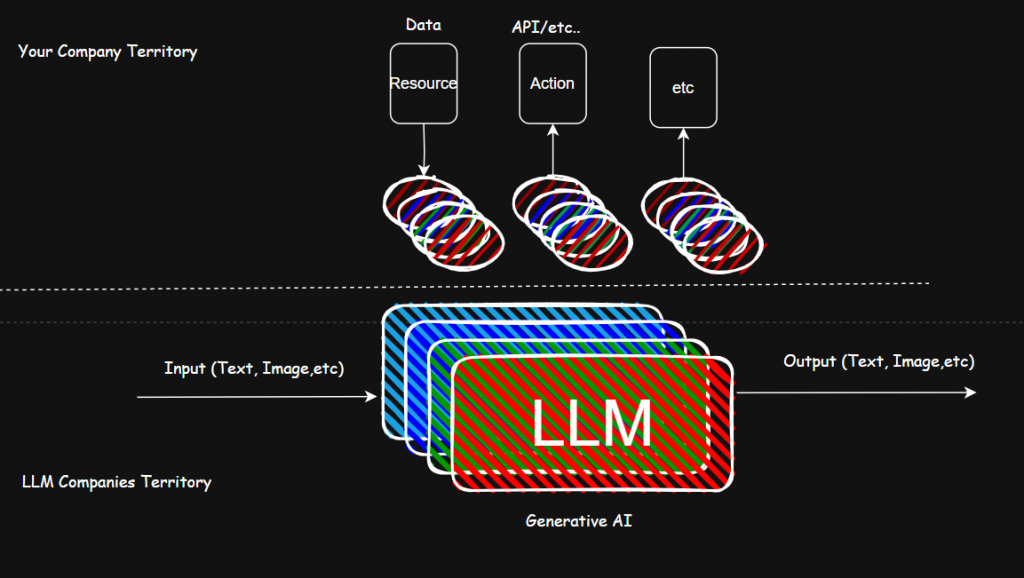

Every day/week, we hear that a new, better model has been released—more accurate, faster, and cheaper. This forces us to change models constantly. We try to make our applications loosely coupled so they don’t depend on a single LLM. However, methods for providing context, integrating API calls, and internal details keep changing, requiring constant adaptation.

In fact, there is no standard way to provide context and tools to all types of LLMs. This created an urgent need for a standardized approach to providing context to LLMs so that every LLM could receive context through predefined methods, without requiring changes every time a new model appears.

Enter Model Context Protocol (MCP)

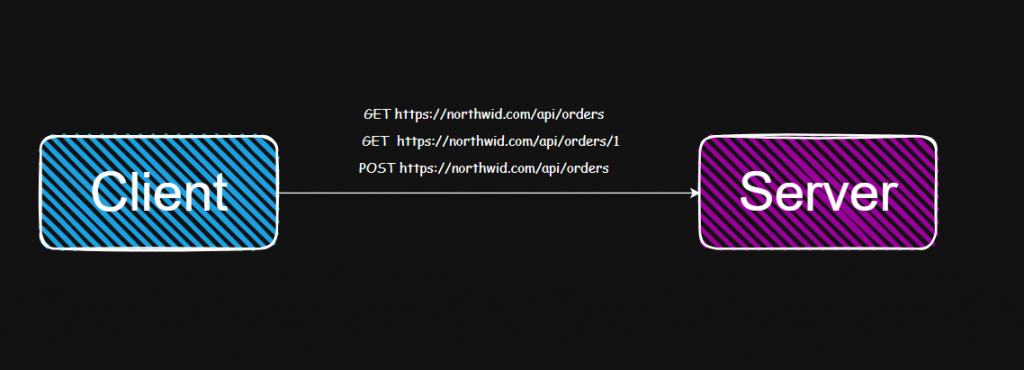

Think of REST API standards—anyone building a REST API server knows that a 200 response code means success. This standardization allows servers and clients to interact seamlessly, regardless of implementation details.

Similarly, MCP defines a standard way to provide context and integrate tools with LLMs. This protocol, developed by Anthropic, aims to standardize how we supply context and actions to AI models.

Will MCP become the industry standard? Nobody knows. But as of today, it’s the most promising approach, and it works really well.

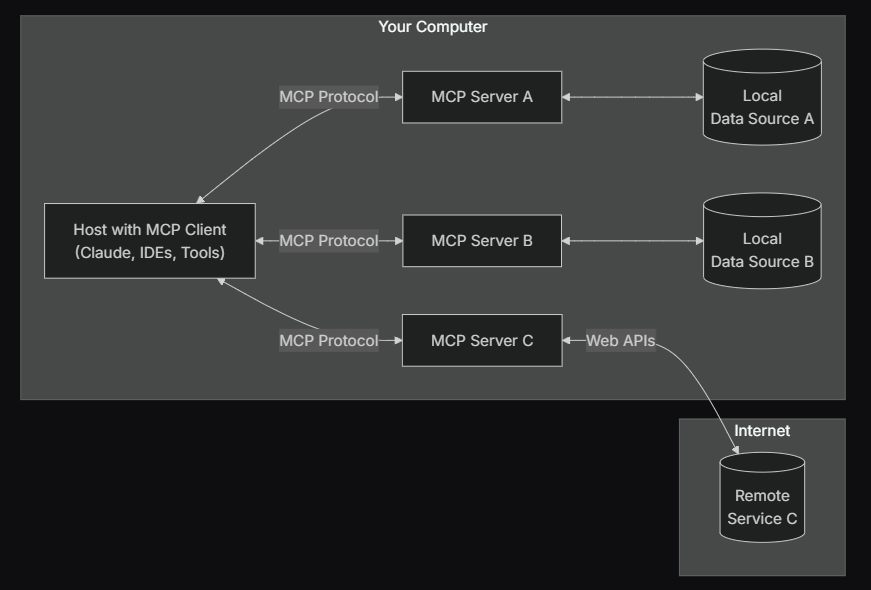

Below is high level architecture from the site of MCP with their components.

- MCP Hosts: Programs like Claude Desktop, IDEs, or AI tools that want to access data through MCP

- MCP Clients: Protocol clients that maintain 1:1 connections with servers

- MCP Servers: Lightweight programs that each expose specific capabilities through the standardized Model Context Protocol

- Local Data Sources: Your computer’s files, databases, and services that MCP servers can securely access

- Remote Services: External systems available over the internet (e.g., through APIs) that MCP servers can connect to

Let’s Code

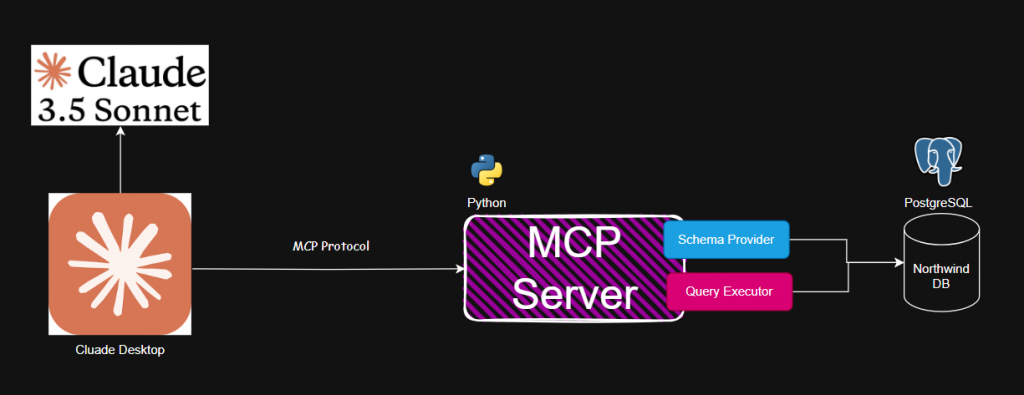

Now, let’s build an AI assistant that understands the database schema and executes queries to provide answers to key business questions. Below is the high-level architecture and design of our project.

The entire codebase is written in Python. Let’s setup the project

Step 1: Setup the Codebase

# Init it

uv init sampleserver

cd sampleserver

# Create Environment

uv venv

# Add dependencies

uv add mcp[cli] httpx

# Create File

new-item sample_server.py

# Open Visual Studio Code

code .Open the project in VS Code. Ensure you have Claude Desktop installed. Download it here: Claude Desktop (free version available).

For this experiment, we use Northwind DB, a well-known sample database.

Step 2: Implement the Server

from typing import Any, List

import asyncpg

from mcp.server import FastMCP

print("Starting Northwind Orders MCP Server...")

# Initialize the FastMCP server

mcp = FastMCP("NorthwindOrdersService")

# Database configuration

DB_CONFIG = {

"host": "localhost",

"port": "5432",

"database": "northwind",

"user": "postgres",

"password": "abc-123"

}

# Database connection pool

db_pool: asyncpg.Pool | None = None

async def init_db_pool():

"""Initialize the database connection pool."""

global db_pool

try:

db_pool = await asyncpg.create_pool(**DB_CONFIG)

print("Successfully connected to Northwind database")

except Exception as e:

print(f"Error connecting to database: {e}")

raise

async def get_db():

"""Get database connection from pool."""

if db_pool is None:

await init_db_pool()

return db_pool

# Provide the schema of db as resource

@mcp.resource("schema://main")

async def get_schema() ->str:

"""Get the schema of the Northwind database after connecting to DB."""

try:

pool = await get_db()

async with pool.acquire() as conn:

query = """

SELECT table_name, column_name, data_type

FROM information_schema.columns

WHERE table_schema = 'public'

ORDER BY table_name, ordinal_position

"""

rows = await conn.fetch(query)

schema = {row['table_name']: [] for row in rows}

return "\n".join(sql[0] for sql in schema if sql[0])

except Exception as e:

print(f"Error fetching schema: {e}")

return f"Error: {str(e)}"

# Execute the query query and return the result

@mcp.tool()

async def execute_query(query: str) -> str:

"""Execute a SQL query and return the result."""

try:

pool = await get_db()

async with pool.acquire() as conn:

result = await conn.fetch(query)

return str(result)

except Exception as e:

print(f"Error executing query: {e}")

return f"Error: {str(e)}"

# Run the FastMCP server

if __name__ == "__main__":

mcp.run(transport='stdio')

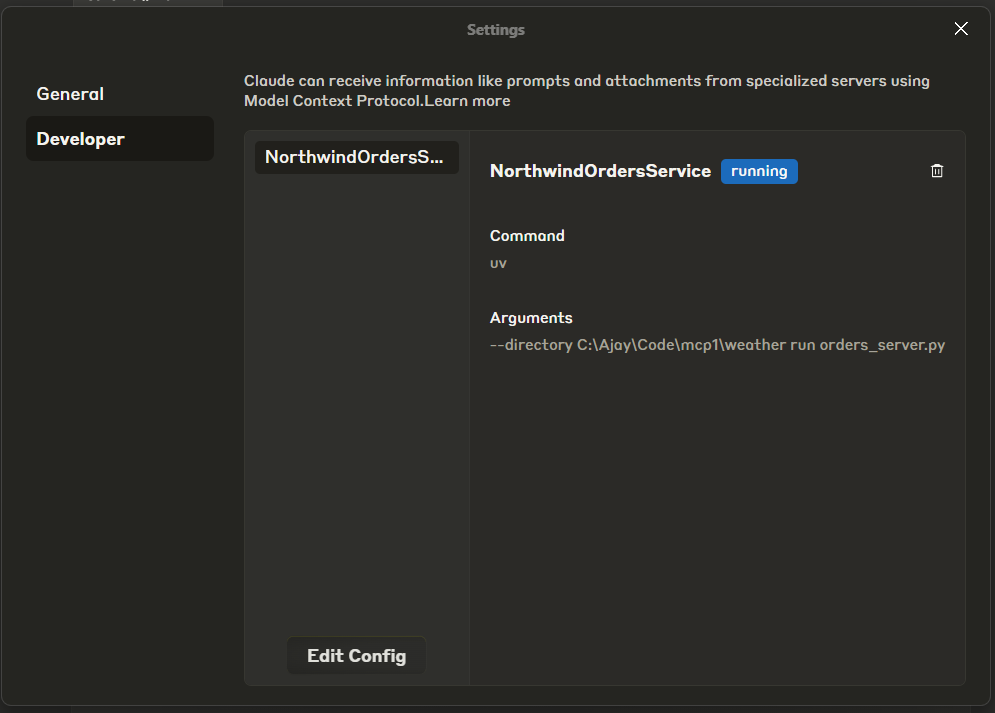

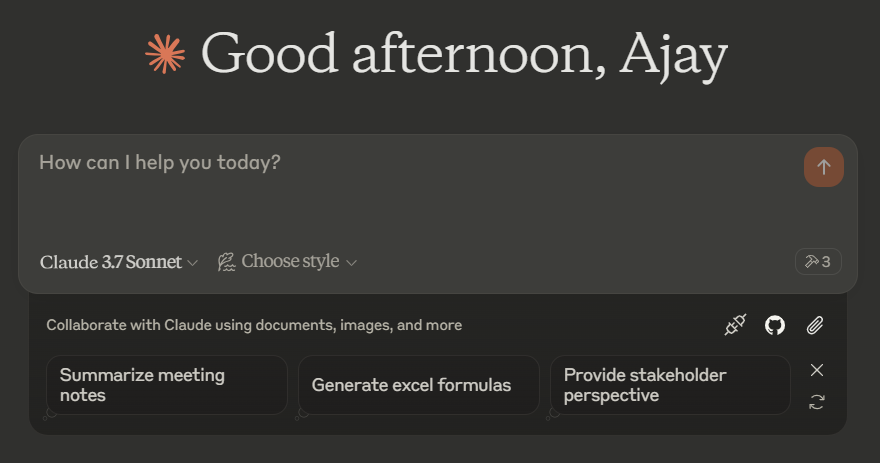

Now, we need to configure Claude Desktop to connect to our server.

- Open Claude Desktop

- Go to File → Settings → Developer

- Click Edit Config (opens a JSON file)

Add the following configuration:

{

"mcpServers": {

"NorthwindOrdersService": {

"command": "uv",

"args": [

"--directory",

"C:\\Ajay\\Code\\mcp1\\weather",

"run",

"sample_server.py"

]

}

}

}

Make sure to update the path (C:\\Ajay\\Code\\mcp1\\weather) to your correct project directory.

Step 3: Restart and Test

- Relaunch Claude Desktop

- If the server doesn’t appear, kill the process and restart it

- Once running, you should see the server listed in the UI

Step 4: Interact with MCP

Click the symbol to select the context, then choose NorthwindOrderService.

Next, check the available tools. You should see execute_query listed.

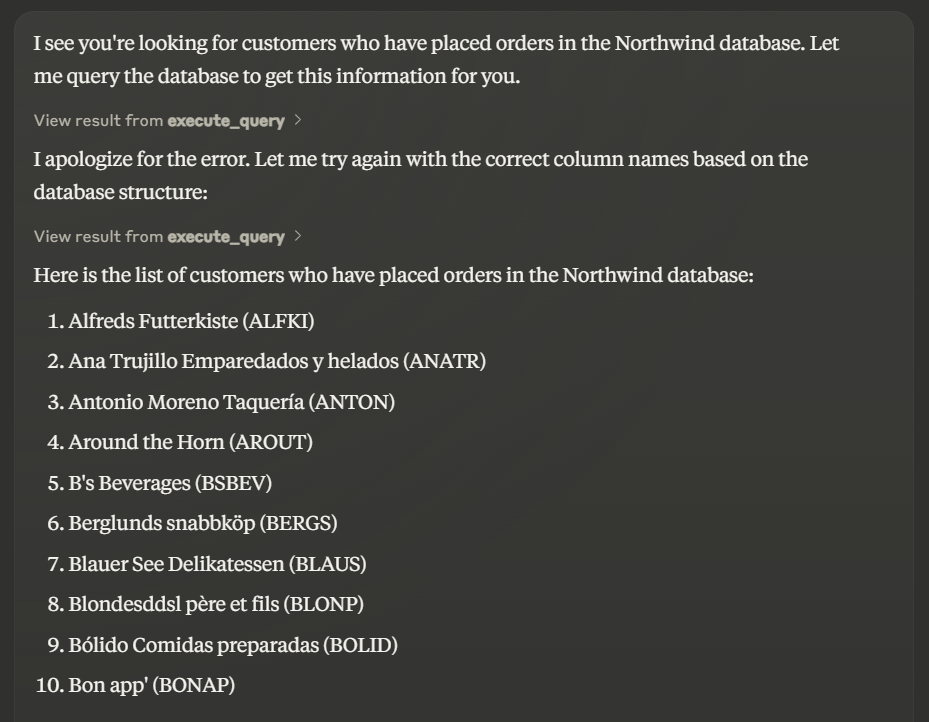

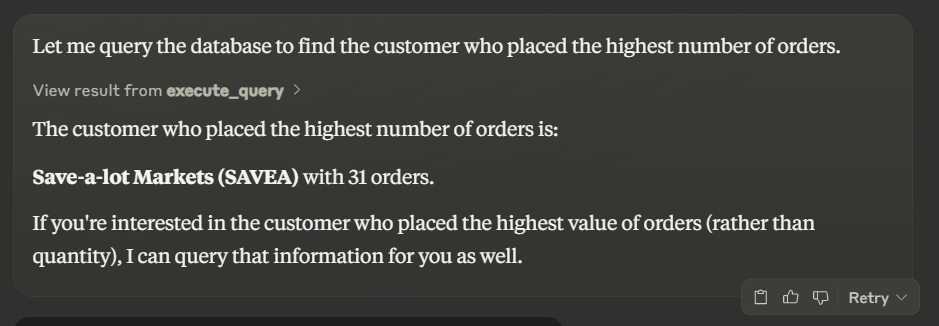

Now, let’s test it with some queries:

Prompt 1: Give me a list of customers who have placed orders.

Prompt 2: Who placed the highest order

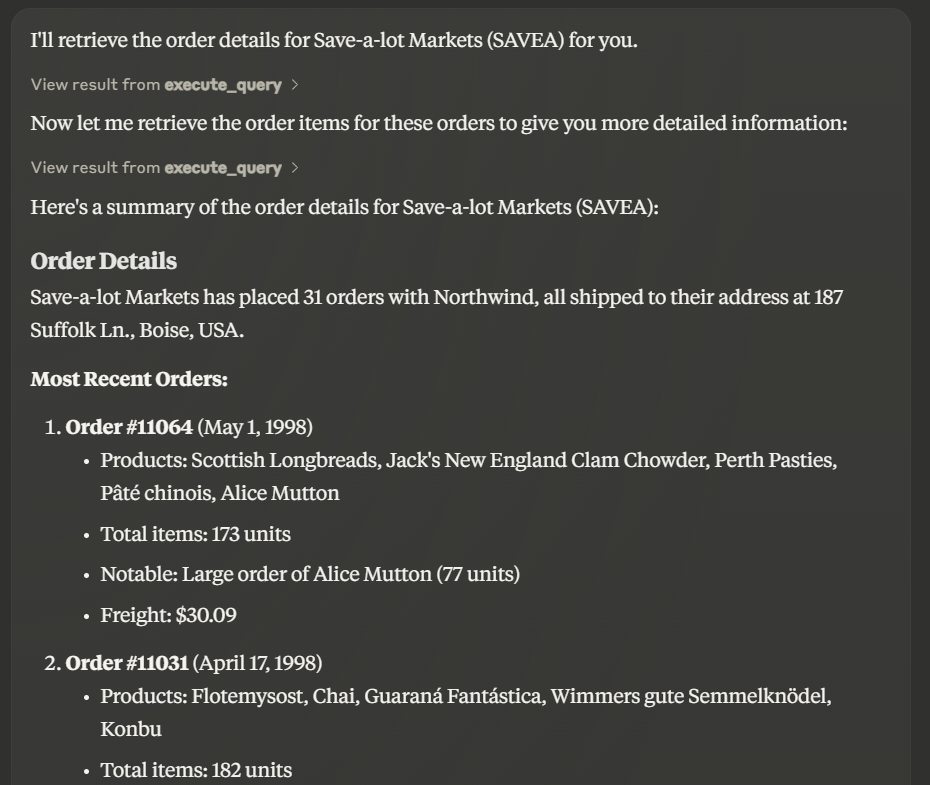

Prompt 3: Can you provide me order details from this customer

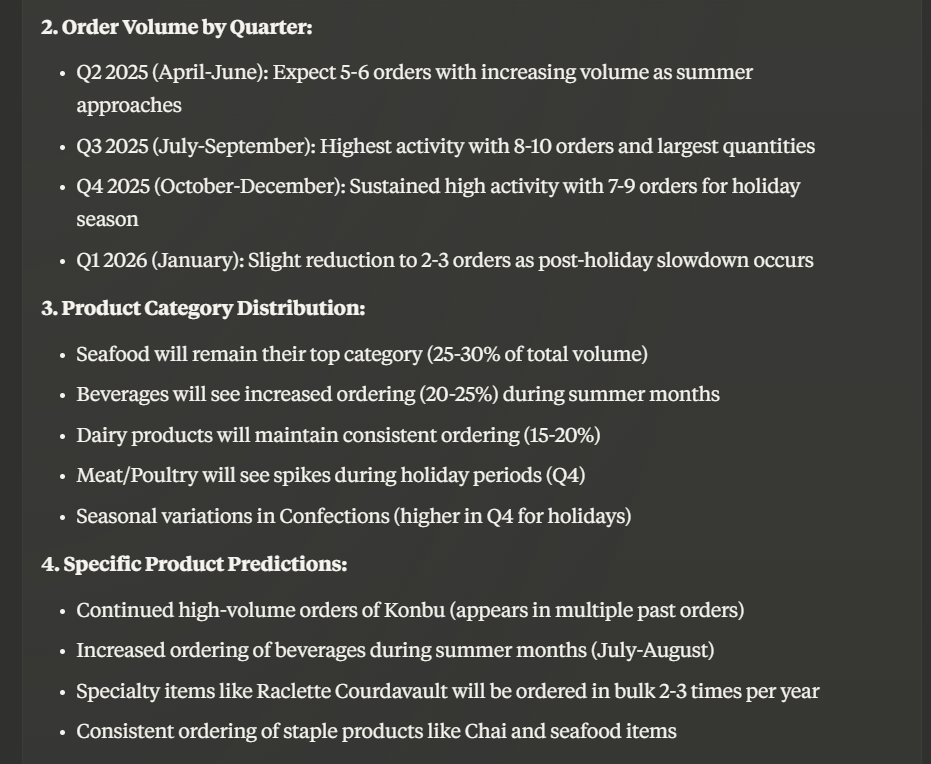

Prompt 4: Can you do some prediction on what could be the order pattern for this customer in next 10 months

Conclusion: Why MCP Matters

The Model Context Protocol (MCP) is a game-changer in AI development. It provides a standardized way to integrate context and tools, making it easier to work with multiple LLMs without rewriting integration logic every time a new model arrives.

We have built a practical AI assistant using MCP, which allows us to query Northwind DB effortlessly. But this is just the beginning. The same approach can be applied to various domains, from financial analysis to customer support automation.

Will MCP become the industry standard? Time will tell. But for now, if you’re working with LLMs, you should absolutely start experimenting with MCP. It might just be the foundation of the next AI revolution. 🚀

References

https://modelcontextprotocol.io/introduction